Note: All content is shared with the permission of Ooma Inc.

Ooma Home Security is a contract-free DIY home security solution that competes with other DIY home security products such as Ring and Arlo, as well as traditional security services such as ADT. Users purchase a base station and a number of sensors, which connect wirelessly to the base station. The system is controlled via a smartphone app that lets users call their local emergency services no matter where they are.

I led this study for two purposes: to uncover the sources of vaguely stated customer complaints about the setup process that could turn into negative customer reviews, and to help the Ooma product team gain better insight into how people use the product.

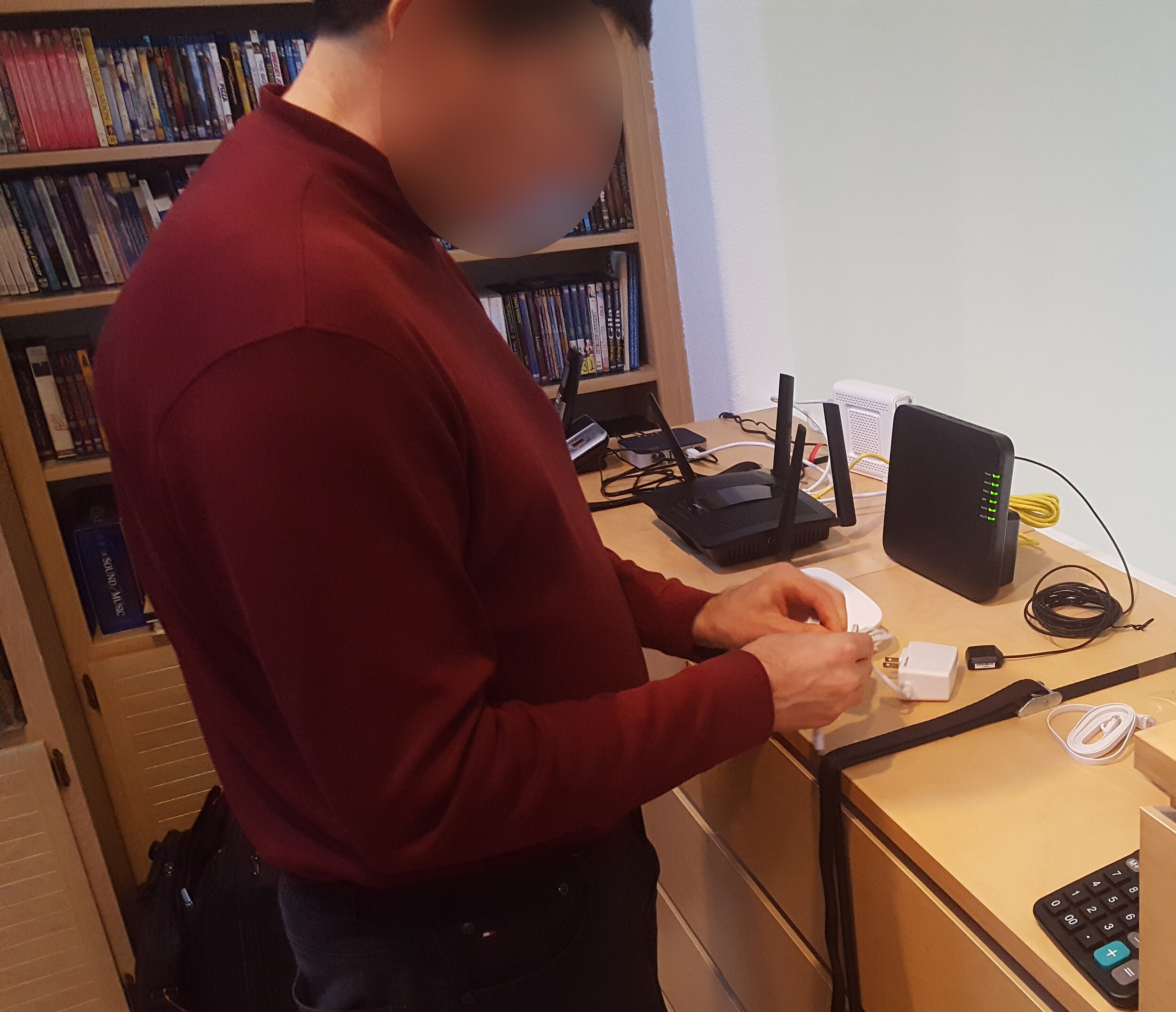

After developing a testing plan and receiving approval for it, I recruited four Ooma employees who had not used the service before, compensating them with the hardware they would set up. We tested with two women and two men, with ages ranging from the mid-20s to the early 50s. We held the usability tests in the subjects’ homes because the methodology called for the subjects to install sensors on walls, door frames, and window frames. In-home studies also put subjects at ease in a natural setting, compared with the more artificial, clinical nature of a lab. Holding the sessions mid-day, when fewer occupants are usually present, also reduced the chances of interruptions (such as family members needing something or deliveries arriving) that can derail in-home studies.

Above: Two stills from video recorded during one of the usability test sessions. Subject faces and computer screens are blurred for privacy.

Subjects were given an Ooma Home Security starter kit, which included a base station, a motion sensor, and two door/window sensors.

The testing plan was meant to emulate the out-of-the-box experience as closely as possible, so subjects received minimal assistance. They were instructed to perform the following tasks:

- Unbox the hardware
- Activate the base station using the Ooma Home Security app
- Connect the base station to an internet router
- Wirelessly connect the supplied sensors to the base station
- Mount the supplied sensors
- Configure notifications, alerts, and modes using the Ooma Home Security app

During each task, we observed everything our subjects did (their physical actions, their expressions, and what they said) to understand their experience with each step of the setup. We also asked the subjects to rate each task on a scale from 1 (low) to 5 (high) based on their experience.

Results and Findings:

All four users were able to set up their Ooma Home Security systems, and the team considered the task ratings acceptable. However, we uncovered usability problems at each stage of the setup that could drive negative customer reviews. These problems and their solutions are detailed below.

Packaging:

The packaging was a hinged-lid design with slotted tabs on each side of the box. Users had trouble opening the packaging because the tabs were not obvious. The product tray also did not hold the parts well, so the contents looked messy when the lid was opened. We resolved this by revising the product tray and switching to a sleeved box.

Documentation:

Most of the manuals went unread because the companion app already contained setup instructions. However, users who did read the manuals found that they did not provide enough information. For example, while the base station boots, a light flashes multiple colors to indicate status. The manual did not mention this behavior, which confused users. We revised the manuals to better walk the user through each step and explain what to expect during setup. We also planned ways to guide users to the correct manual when several are included, as is the case with starter kits. Once the manuals were revised, we distributed them to volunteer employees for feedback prior to finalization. Our volunteers reported that the manuals were much easier to read and more informative than past iterations. Looking back on this part of the study, I would have collected more quantitative data, such as measured time spent reading the manuals and user ratings of the new content.

Application:

When the app requested location permissions, users declined due to privacy concerns. Location permissions are integral to the app’s ability to arm and disarm the security system based on user location. We added a primer screen before the permission popup to explain why the app is requesting location permission.

When the base station goes through a software update, the app displays a status saying the base station has lost its connection to the internet, which confused users. An update is planned to explicitly inform users that the base station is going through a software update lasting X minutes.

Our test subjects had trouble grasping the concept of security modes, which allow sensors to respond differently based on the selected mode. The app displayed the current mode at the top of the screen, where our subjects did not notice it. To change modes, users had to swipe across the top of the screen, which was not obvious. Senior Product Manager Anthony Hizon and I worked with an external development firm to design a new mode selector system based on test subject feedback.

Hardware:

The door/window sensor used small recessed dots on the connector parts to hint at the proper alignment of the parts. These turned out to be too hard to see and were replaced with more explicit arrows.

Special thanks to Anthony Hizon and Thad White for their assistance and note-taking during these sessions, as well as their leadership on the Ooma Home Security project.